Feature article

by Josu Urquidi

How software development with containers and microservices will continue to disrupt the industry and pose threats to companies like IBM.

“Software is eating the world” – the famous phrase, coined by legendary founder and investor Marc Andreessen, seems more relevant than ever. Software has transformed the way we travel, communicate, work and pretty much everything else.

How did we get here? Let’s start with some background. The computers of the 1960s were big, chunky, and only affordable to large institutions such as governments or universities. Researchers therefore started working on tools for sharing computing time, as every clock cycle was precious and expensive. That’s when IBM (Big Blue) developed CP-40, the first virtualisation software that allowed multiple users to do different things on the same machine. Around that time, Intel was born, and with it the first commercial microprocessor (the 4004). Intel’s co-founder Gordon E. Moore predicted that the number of components on a chip, and with it computing performance, would double every year (he later revised this to every two years).
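To get a feel for how much that revision matters, here is a small back-of-the-envelope sketch in Python. It assumes idealised, perfectly regular doubling, which is a simplification, and the function name `growth_factor` is purely illustrative.

```python
def growth_factor(years: float, doubling_period_years: float) -> float:
    """Growth multiple after `years` if a quantity doubles
    once every `doubling_period_years` (idealised assumption)."""
    return 2 ** (years / doubling_period_years)


if __name__ == "__main__":
    # Compare Moore's original estimate (1 year) with his revised one (2 years).
    for period in (1, 2):
        factor = growth_factor(10, period)
        print(f"Doubling every {period} year(s): x{factor:.0f} after 10 years")
```

Over a decade, yearly doubling compounds to a factor of 1024, while doubling every two years gives a factor of 32.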

In the 1980s, Big Blue struck again with the personal computer (PC), which democratised computing. It was based on Intel’s x86 architecture. Because PCs were so affordable (compared to mainframes), companies could deploy each application on dedicated hardware. Virtualisation efforts consequently stalled for nearly two decades, until Moore’s law struck again: x86-based chips became so powerful that a single application could no longer take full advantage of the available power. That’s when the potential of running virtual machines on PC hardware was recognised. Companies like VMware, inspired by IBM’s virtualisation efforts for mainframes, began to commercialise virtualisation solutions on commodity hardware, and open-source initiatives like the Xen project (and later its commercial siblings from Oracle and Citrix) emerged.

We are now a step further: IT infrastructure is deployed on demand (as a service) and computing capacity is sold on a pay-per-use model. What was prohibitively expensive years ago now costs a tiny fraction of that on Amazon’s cloud. Capex becomes opex.

One cannot help but wonder what’s going to happen next. How can one make best use of all the power that Moore’s law will bring in the next decade? Will virtualisation be as important as it is today? A look at the industry’s trends reveals that innovation is far from decelerating. Here are my thoughts:

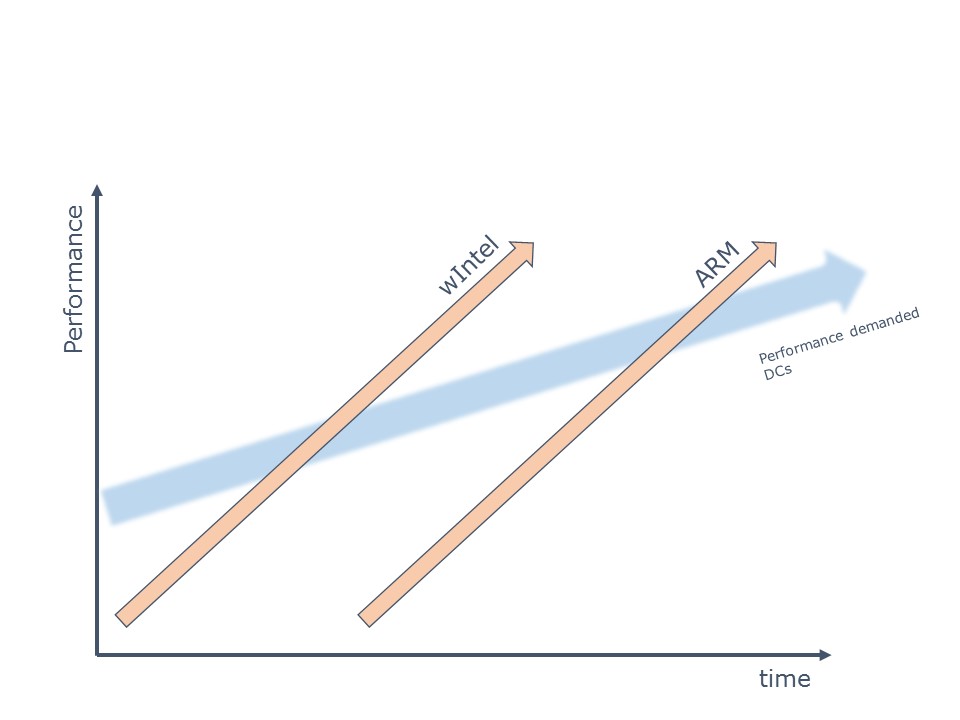

First, mobile components will invade the data center as commodity hardware. If you happen to own a data center, you know that a large part of the monthly bill comes from powering the servers and keeping them cool. What if you could run all your services on a low-power chip that needed no cooling? The running costs of a data center could be dramatically reduced. Well, it just so happens that those chips already exist, and chances are that you are reading this article on a device powered by one. The ARM architecture, which is what smartphone chips are built upon, offers strong performance at low wattage and requires no dedicated hardware, just off-the-shelf smartphone parts.

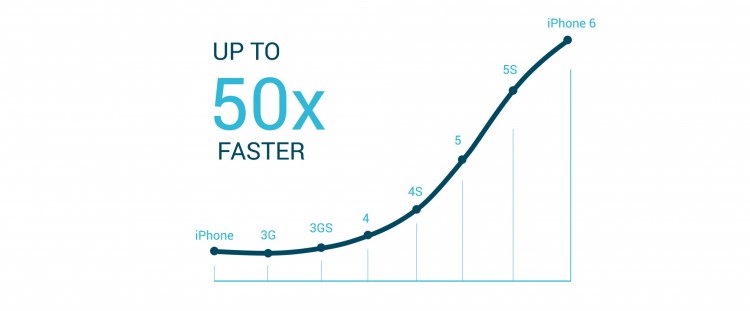

Moore’s law delivers faster performance year after year. (Adapted from Apple)

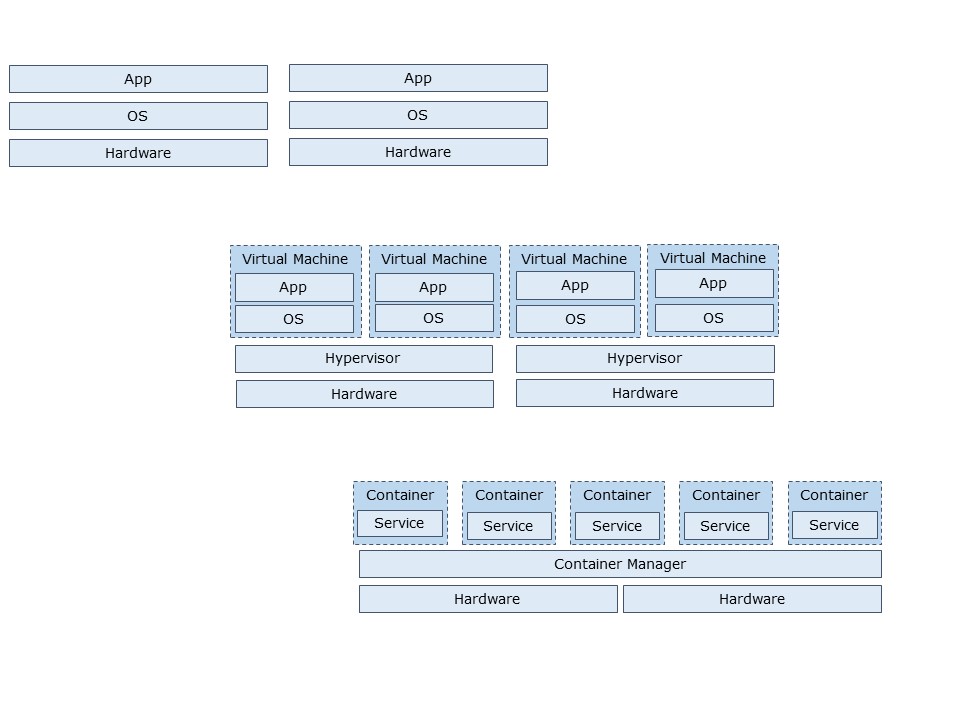

Furthermore, virtual machines will be replaced by containers and microservices. Today, individual servers are partitioned to host different virtual machines, in which different operating systems and monolithic applications can run. Tomorrow, hardware performance will be aggregated into a single pool of shared resources, distributed across several servers, farms or even data centers. Individual microservices (instead of whole applications) can run inside isolated containers. The advantages? Higher scalability, better isolation and near bare-metal performance thanks to a smaller footprint.

Containers have a smaller footprint, as there’s no need to boot a whole OS for each app
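To make the idea of a microservice a little more concrete, here is a minimal sketch using only Python’s standard library. It is an illustration under stated assumptions, not a production design: the `/greeting` endpoint, the `GreetingHandler` class and the `make_server` helper are all hypothetical names. The point is that the service does exactly one thing and runs as a single process, which is the kind of unit one would typically package into its own container.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


class GreetingHandler(BaseHTTPRequestHandler):
    """Hypothetical single-purpose service: answers GET /greeting with JSON."""

    def do_GET(self):
        if self.path == "/greeting":
            body = json.dumps({"message": "hello"}).encode("utf-8")
            self.send_response(200)
            self.send_header("Content-Type", "application/json")
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)
        else:
            # Anything else is outside this service's single responsibility.
            self.send_error(404, "Not found")

    def log_message(self, format, *args):
        # Keep the example quiet; a real service would log requests.
        pass


def make_server(port: int = 8080) -> HTTPServer:
    """Create (but do not start) the service on the given port.

    Port 8080 is an arbitrary illustrative default; 0 picks a free port.
    """
    return HTTPServer(("0.0.0.0", port), GreetingHandler)
```

Calling `make_server().serve_forever()` starts the service. Several such small services, each in its own container, can then be scaled independently instead of scaling one monolithic application.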

So, what does all this techy-talk mean? It means that we might observe what Clayton Christensen calls Disruptive Innovation.

Disruptive innovation describes the pathway of a new entrant into a market dominated by established companies. The new entrant offers a product that is worse than the incumbent’s product, but cheaper and simpler. Such ‘good enough’ products appeal to the less demanding customers who are being over-served by the incumbents’ products. As the performance on offer improves more quickly than customers’ demands, new entrants are soon able to satisfy even the high end of the market, effectively disrupting the incumbent’s territory.

Disrupters enter the market from below

Disruptive innovation is already happening. Baidu, China’s internet giant, already runs some services on ARM machines. Facebook and Amazon are experimenting with smartphone components in their back-end operations as well. Open-source initiatives like OpenStack, a cloud platform, let anyone build a private cloud without the hassle of proprietary systems, and OpenStack supports ARM. Docker, the leader in containers, recently joined the unicorn club and is also open source. The pieces needed to undercut the big players are already in place.

The move towards ARM, containers and open source is a potential gift for the entrepreneur. As the industry moves to freely available components, both physical and virtual, the barriers to entering the IT industry are significantly reduced. Engineers can develop innovative solutions without investing in expensive hardware or licenses. Think of the German Mittelstand – small and medium companies often referred to as the backbone of Germany’s economy. These firms most likely want to move to the cloud, but they lack the resources to deploy a private cloud, or they are wary of having their data stored in a public cloud (this is especially true in Germany). With this new paradigm, small and medium enterprises can afford to build and deploy private, scalable environments.

The coming times are going to be challenging for Big Blue and its peers. In a future where hardware is a commodity and open source is the standard, is there still a place for Microsoft? Cisco? Intel? Tech giants are navigating troubled waters, and only time will tell if they will reach safe harbour.

This article was previously published by Motius GmbH, Munich, in their Motius Talent Pool Writes series.